Getting the Most Out of AI Growth Labs

Transform the NHS and Beat Reform

Summary

When running sandboxes, set transparent and objective access criteria, provide clear consumer disclaimers, and report transparently on progress to diffuse knowledge amongst firms and regulators. This ensures that sandbox participants are not unfairly advantaged.

To ensure support for the entire range of possible innovations, do not narrowly pre-specify the regulations that could be relaxed within sandboxes.

When selecting areas in which to run sandboxes, focus on safety-critical sectors with stringent and redundant process regulation. Harness information from sandboxes to design advance regulation and deregulation commitments in these sectors.

Focus on healthcare for both productivity benefits and political benefits.

Within healthcare, deploy sandboxes for AI-assisted physiotherapy and cognitive behavioural therapy, to tackle economic inactivity due to long-term sickness.

Deploy a sandbox for using AI agents in monitoring and evaluation / clinical audits, to enable better management decisions at the local level, and to help the Department of Health and Social Care build a “strategic brain” to inform decision-making.

Prioritise working with small “district general hospitals” in Britain’s left-behind towns, rather than large tertiary centres in London.

Work with professional regulators like the General Medical Council to ensure all major bottlenecks to AI adoption are tackled.

Develop a secondment model to diffuse knowledge across regulators and AI Growth Labs.

Consider developing sandboxes in areas where Britain has existing comparative advantages, like quality control for alternative proteins and vaccines.

Last month, Britain’s Science and Tech Secretary Liz Kendall announced AI Growth Labs, a cross-government programme which seems to focus on “regulatory sandboxes”, where companies can test products under supervision, with specific regulations temporarily relaxed.

If executed well, this is exactly the kind of policy that will increase economic growth, accelerate AI adoption in public services, and play a quiet but significant role in helping Labour beat Reform in 2029.

AI and the Case for Regulatory Sandboxes

Regulatory sandboxes are a recent innovation, pioneered by Britain in 2016 through the FCA’s Fintech Sandbox.

The economic case for them is as follows: process-based regulation is often necessary to minimise harmful outcomes. But any process-based regulation will inherently be designed for the processes of yesterday, and regulators cannot predict the new processes that innovators will create.

When innovation leads to outcomes which would satisfy regulators, but through new processes, their adoption may be hampered by outdated process regulations. In other cases, innovation may simply change the cost-benefit profile of regulation, strengthening the case for relaxing some regulations and tightening others.

Regulatory sandboxes help regulators better understand how innovative products work in the real world, and help companies understand the expectations of regulators. This means regulators can make better-informed adaptations to regulation, and companies can make better-informed adaptations to products.

Ultimately, regulatory sandboxes mean that innovative products get to market faster, delivering better products for consumers, higher wages for workers and more investment for start-ups, helping them to scale.

The case for regulatory sandboxes was already strong. But when you consider the rapid pace of AI innovation, alongside the ability of AI to speed up innovation itself, the case becomes overwhelming.

Regulatory sandboxes might also be vital in the competition between states to capture the economic value from AI. Countries that safely accelerate AI adoption will achieve more economic growth, be more likely to scale billion-dollar AI companies, and capture more tax revenue to fund strong public services.

The Case Against Sandboxes

To execute sandboxes well, it is important to understand the case against them, and to mitigate their weaknesses.

Sandboxes create an opportunity cost: instead of broad improvement in regulator performance, money is spent on improving performance with respect to a handful of companies or within a particular area of innovation. Additionally, there are risks of regulatory capture, and government intervention unfairly favouring firms that enter the sandbox over firms that do not.

I worked on the page on regulatory sandboxes within the Centre for British Progress’s R&D Policy Toolkit. There, drawing on economic theory and evidence, we offer specific recommendations to tackle these risks: transparent and objective access criteria, clear consumer disclaimers, and transparent reporting on progress to diffuse knowledge amongst firms and regulators.

We also recommend that sandboxes should not narrowly pre-specify which regulations could be relaxed, helping them to be technology-neutral and support a broader range of innovation.

What Should AI Growth Labs Focus On?

Since one of the downsides of a sandbox is that it can only narrowly focus on one area of innovation, these areas must be selected carefully to unlock the greatest productivity gains. While the teams working on AI Growth Labs will need to consider many factors to determine their priorities, I suggest a few focuses here.

The first is where regulatory sandboxes may be better suited than alternative innovation policy tools: safety-critical sectors.

The second is a specific safety-critical sector which is also politically salient and, based on my experience, particularly amenable to productivity improvements from AI: healthcare.

The last is relevant to all innovation policy: areas of existing comparative advantage.

Safety-Critical Sectors

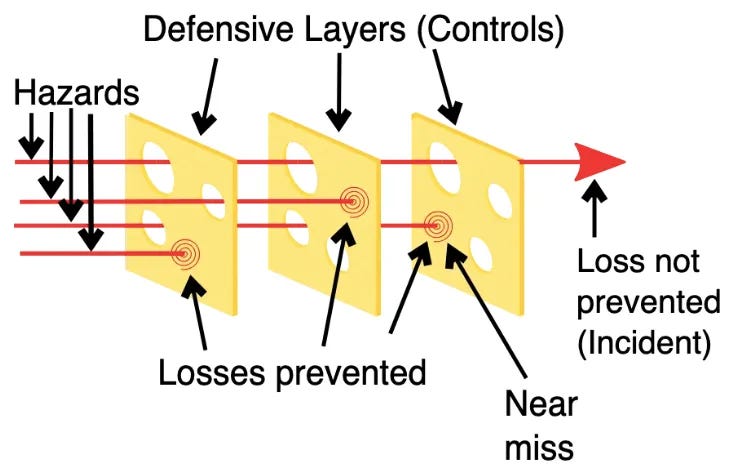

The case for sandboxes is strongest where process regulation is most stringent. In safety-critical domains like healthcare, nuclear, aviation and pharmaceutical manufacturing, we care deeply about minimising risk. This leads to extensive layers of stringent process regulation, including layers which are intentionally redundant, in line with the “Swiss Cheese Model”. But as companies invent better AI tools, lower-cost approaches to minimising risk may emerge, and the cost-benefit profiles of regulation may radically change.

The Swiss Cheese Model. Source: An Australian and New Zealand Human Resource Management Guide to Work Health and Safety, Open Education Resources Collective.

Nuclear power is already a government priority, to deliver cheap, green and firm power - vital to both the goal of reaching Net Zero by 2050, and the government’s ambition to be an “AI Maker”. After the Nuclear Regulatory Taskforce makes its recommendations, AI Growth Labs can further improve our approach to nuclear, with a programme targeted at firms developing AI technologies which might reduce the costs and time it takes to get nuclear power stations online.

The government is also currently looking for ways to retain more life sciences investment. Working with companies using AI to find efficiencies in areas like pharmaceutical manufacturing could contribute to this, while getting the NHS more bang for its buck on medications spending, which is likely to rise.

AI Growth Labs could also harness evidence from sandboxes to design advance regulation and deregulation commitments, which may be most useful in safety-critical sectors.

For example, where a process regulation for nuclear power significantly raises costs, the government could commit in advance to relaxing it once AI innovations improve safety elsewhere - an advance deregulation commitment. Or, where AI innovations might significantly improve pharmaceutical manufacturing safety with minimal cost, the government could commit in advance to mandating the use of such innovations - an advance regulation commitment.

Advance commitments could complement sandboxes by reducing regulatory uncertainty at a broader, market-wide level, crowding in more private R&D investment and helping our start-ups to scale.

Healthcare is a safety-critical sector, with extensive layers of regulation to protect safety and privacy. But it is also particularly amenable to AI-assisted productivity improvements, and holds the key to Labour beating Reform in 2029.

AI Can Transform Healthcare, but Diagnosis is a Distraction

When I talk to people in politics about AI in healthcare (including at a roundtable hosted by the previous AI minister), I’ve noticed a widespread tendency to assume that I’m talking about AI-assisted diagnosis.

This misunderstands how healthcare systems work. LLM-assisted dynamic questionnaires are likely to eventually save time for doctors in general practice and emergency care, but most healthcare consumption in the UK is by elderly patients with progressive or relapsing chronic, long-term diseases which are already diagnosed. As an example, I have contributed to only two or three diagnoses in my three months working as a doctor so far. There is a ceiling effect which will limit productivity gains from AI-assisted diagnosis, meaning this area should not be a priority for AI Growth Labs.

The government has correctly identified AI-assisted discharge summaries (letters sent from hospitals to a patient’s GP when the patient is discharged) as an opportunity within the “AI Exemplars” programme. As these tools are rolled out nationwide, they might save resident doctors like me 15-30 minutes every day, giving us more time with our patients. But more importantly, they tackle a key bottleneck in patient flow, helping patients move out of hospital and into the community more quickly, which is a priority for raising NHS productivity.

There are other promising opportunities too.

AI physiotherapy trials have cut waiting times, and evidence supporting AI-assisted cognitive behavioural therapy (CBT) continues to emerge. These treatments target the conditions that do most to reduce national productivity: musculoskeletal and mental health issues account for around 50% of all conditions reported by people who are economically inactive due to long-term sickness. Tackling regulatory barriers to AI adoption in these areas will therefore be critical to the Department of Health and Social Care (DHSC)’s ambition to be a “growth department”.

Additionally, AI physiotherapy could free up time for physiotherapists to assess patients’ mobility needs, also helping get patients out of hospital and into the community. And beyond mental health disorders, AI-assisted CBT has promising applications for prevention, including smoking cessation and diabetes management.

A Strategic Brain for DHSC

Monitoring and evaluation in healthcare, a “structured management practice” highlighted as important to productivity by economist and advisor to the Chancellor John Van Reenen, provides a particularly exciting opportunity.

In the last two months, I’ve completed three “clinical audits” at my hospital. These are exercises where doctors assess local performance on specific metrics against national standards, and identify opportunities for improvement. We usually do this by going through the medical records of small, unsystematic samples of patients, at intervals ranging from a few months to a year. This is important, useful and time-efficient, but it does not provide the most accurate or up-to-date information, so it does not enable the best management decisions.

Tools incorporating AI agents could replace this approach with a real-time, continuous census of all relevant patients. This would help us assess performance more accurately, at greater granularity and scale, freeing up doctors for other tasks while enabling better management decisions at every level, from local to national. It could offer the Department of Health and Social Care a true “strategic brain”, with an unprecedented quality of data to inform decision-making. For now, however, questions remain around what it means for AI agents to access patient data, and how this can be done in a way that protects confidentiality. A targeted sandbox could help answer them.

Professional Regulation is Key to AI Adoption in Healthcare

To accelerate AI adoption in the NHS, we need to reform professional regulation - not just product regulation. Even if AI Growth Labs works with the MHRA to reform regulatory barriers for companies, barriers imposed by professional regulators will continue to discourage individual clinicians from actually using the AI tools that their bosses procure, while also discouraging the “bottom up” adoption of secure AI tools.

The optimal approach to regulating professionals in industries like medicine, nursing, law and teaching will look different in an AI-enabled world. To make our public services more productive, we may need to relax regulations on workers in some contexts.

But because AI diffusion and adoption happens via both top-down procurement by managers and bottom-up adoption by workers, we may also need to tighten regulations around AI in other contexts, to ensure workers adopt “bottom-up” AI safely, appropriately and effectively.

This means AI Growth Labs needs to work closely with professional regulators, including the General Medical Council and the Nursing and Midwifery Council. Without tackling this key bottleneck to the use of AI tools, there is a risk that sandboxes will deliver minimal productivity improvements.

Practically, AI Growth Labs should implement a secondment model, where staff from regulators (including professional regulators) spend stints working with AI Growth Labs teams, helping to diffuse domain-specific and tacit knowledge.

An AI-Enabled NHS Could Help Beat Reform

Research by the Good Growth Foundation shows that fixing the NHS is a key priority for Labour-Reform switchers. An NHS transformed with AI, which helps patients see their doctors more quickly and gives doctors more time with their patients, could be key to bringing voters back to Labour in 2029. Without sandboxes, it would be difficult to make this happen in a sector with so much process regulation.

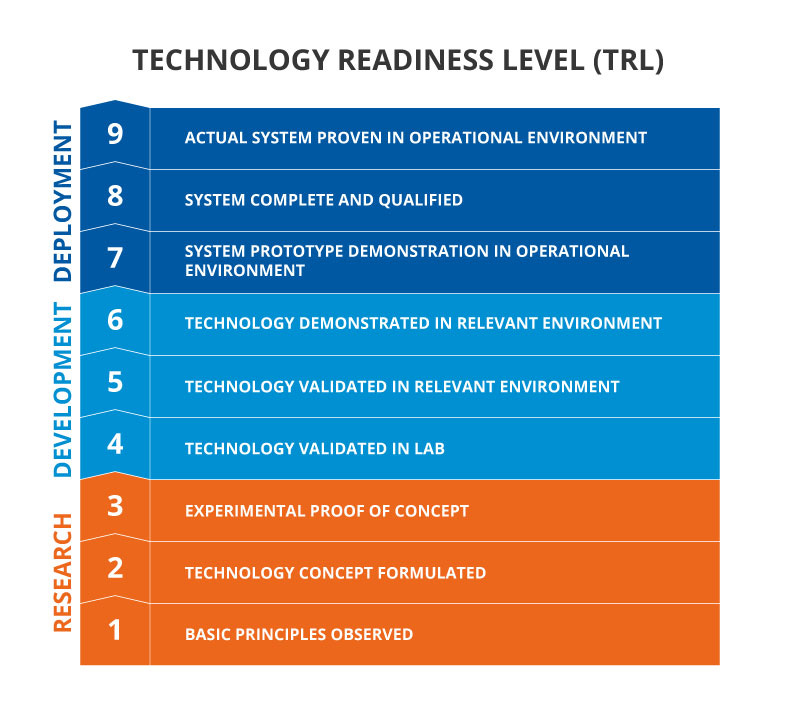

Fortunately, compared to alternative innovation policy tools, sandboxes are particularly well-suited to innovations that are close to market, or at a high “technology readiness level” (TRL). Many AI applications in healthcare and public administration are already at TRL 7-9. Fundamental research isn’t needed - products just need to be deployed. If sandboxes are up and running quickly, widespread AI in the NHS by 2029 is absolutely possible.

Technology Readiness Levels. Source: What are Technology Readiness Levels (TRL)? The Welding Institute.

However, it’s important that the government doesn’t only work with the usual suspects in London, Oxford and Cambridge when running these sandboxes. Talk of an AI-enabled NHS in London risks alienating Labour-Reform switchers living in parts of the country where the local A&E department still hasn’t adopted electronic patient records.

AI Growth Labs should prioritise working with small “district general hospitals” in Britain’s left-behind towns and our most deprived areas, making sure these places don’t miss out as they did during the digital revolution. Because of the impact of deprivation on health, these are the hospitals where staff are most overstretched, and might benefit most from small savings to their time.

Existing Comparative Advantages

AI Growth Labs could also accelerate AI adoption in sectors where Britain already has a comparative advantage. Given our strengths in AI, we might expect to have a comparative advantage at the intersection of AI and these sectors, helping us build new successful scale-ups, while rapid AI adoption could help maintain our lead within these sectors.

For example, British companies are close to the global frontier when it comes to plant-based and cultivated meat alternatives, with many of these companies based in the North East and Yorkshire. Britain also has particular strengths in vaccines, with Liverpool being home to manufacturing sites for companies like CSL Seqirus and AstraZeneca.

Sandboxes could accelerate the adoption of AI-assisted quality control in both sectors, which would help us build new AI-focused scale-ups and help existing British firms beat competitors abroad, bringing more jobs and growth to Britain.

AI Growth Labs, then, could be DSIT’s greatest contribution to national renewal and the battle against Reform. If this programme is executed well, Labour could enter 2029 having brought AI-driven productivity growth to the NHS in every corner of the country, having delivered a drastic reduction in the cost of building and deploying nuclear power, and having ensured that the AI companies of the future are built here in Britain - an AI Maker, and an AI Taker.

Written in a purely personal capacity - my views are my own and do not reflect those of my employer, or any organisation that I am affiliated with.

I use this Substack for rough thinking around policy and politics, rather than finalised policy proposals. If you disagree with ideas in this post, think I’ve missed something obvious or have any other feedback, I’d love to hear from you!